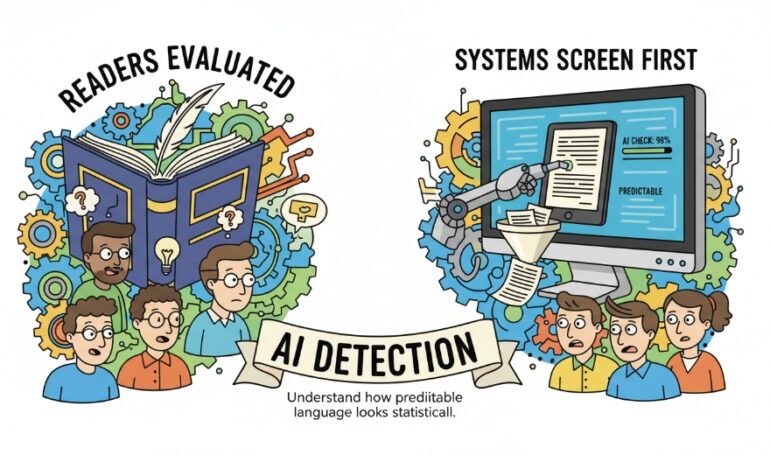

Writing used to be evaluated by readers. Now it is often screened by systems first. That quiet shift has changed how people approach even ordinary drafts, especially in academic, professional, and SEO-driven contexts.

Because of this, many writers now run finished work through an AI checker before worrying about audience response. The goal is not to disguise intent, but to understand how predictable the language looks when judged statistically rather than contextually.

The Real Problem Is Not AI Usage

It is misclassification

Most people using detection tools are not hiding AI assistance. They are protecting themselves from being flagged incorrectly. When writing is clean, neutral, and efficient, it can resemble machine output even if every sentence was written manually.

This uncertainty changes behavior. Writers no longer ask only whether something is correct, but whether it appears too correct.

Caution replaces confidence

As a result, some writers hesitate to revise thoroughly. They leave in redundancy or soften conclusions, fearing that clarity might increase detection scores. This creates a strange incentive to under-edit.

Detection anxiety becomes part of the writing process itself.

Why Polished Text Often Scores Higher

Predictability matters more than quality

AI detection systems do not judge insight or originality. They measure surface patterns such as sentence length, transition density, and structural regularity.

Well-edited explanatory writing often follows stable patterns because that is how humans are taught to write clearly. Unfortunately, clarity and predictability frequently overlap.
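
To make those surface patterns concrete, here is a minimal Python sketch that measures how even the sentence lengths are and how often transition words appear. It is an illustration only, not how Dechecker or any other detector actually works. The function name `surface_stats` and the short `TRANSITIONS` list are assumptions made up for this example.

```python
import re
import statistics

# Hypothetical, deliberately short list of transition words (an assumption
# for this sketch; a real tool would need a far broader vocabulary).
TRANSITIONS = {"however", "therefore", "moreover", "furthermore",
               "additionally", "consequently", "thus"}

def surface_stats(text: str) -> dict:
    """Return rough uniformity signals for a block of prose."""
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    transition_hits = sum(1 for w in words if w in TRANSITIONS)
    return {
        "sentences": len(sentences),
        "mean_length": statistics.mean(lengths) if lengths else 0.0,
        # Population standard deviation: a low value means very even sentences.
        "length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        "transition_density": transition_hits / len(words) if words else 0.0,
    }
```

A low `length_stdev` combined with a high `transition_density` is the kind of regularity that clean explanatory prose tends to produce, whether a person or a model wrote it.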

Templates amplify the issue

Educational and marketing templates worsen the problem. When thousands of articles follow similar outlines, even human-written content starts to look statistically familiar.

This is where detection tools become diagnostic rather than accusatory.

How Writers Should Actually Use AI Detection

Detection belongs after thinking

Running an AI checker on half-formed ideas produces meaningless results. Early drafts are naturally uneven. The useful moment is after structure and argument are settled.

At that stage, detection feedback can highlight where language has become too uniform.

Focus on sections, not scores

Overall percentages are less helpful than sectional patterns. If one paragraph consistently scores higher than others, it often signals abstract summarization rather than concrete reasoning.

Revision then becomes targeted, not defensive.
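
Reading sectional patterns instead of one overall number can be sketched in a few lines of Python. The per-paragraph scores are assumed to come from whatever detector is in use. The function name `flag_outlier_sections` and the 1.5x-median threshold are hypothetical choices for illustration, not a feature of Dechecker.

```python
import statistics

def flag_outlier_sections(scores: list[float], ratio: float = 1.5) -> list[int]:
    """Return indices of paragraphs scoring well above the document median.

    `scores` holds one detector score per paragraph (higher = more AI-like).
    `ratio` is an arbitrary illustrative threshold, not a calibrated value.
    """
    if not scores:
        return []
    median = statistics.median(scores)
    return [i for i, s in enumerate(scores) if s > median * ratio]

# Example: only the third paragraph (index 2) stands out from the rest.
flagged = flag_outlier_sections([0.20, 0.25, 0.70, 0.22])
```

Comparing each paragraph to the document's own median keeps attention on the passages that differ from the writer's baseline, rather than on an absolute percentage.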

Where Dechecker Fits in Practice

It highlights over-optimized language

Dechecker is most useful when it draws attention to language that sounds professionally correct but intellectually empty. These are usually passages that explain without committing to a position.

Adding context, limitations, or personal observation often lowers the detection score naturally.

It supports revision, not avoidance

The strongest edits prompted by detection are additive. Writers expand reasoning instead of distorting grammar. This keeps the text credible for humans and less uniform for systems.

That balance is difficult to achieve without feedback.

Detection Beyond Written Text

Transcribed speech has similar problems

Spoken content converted into text often becomes unnaturally smooth. Pauses disappear, repetitions are cleaned up, and sentence boundaries are standardized.

If interviews or lectures are processed through an audio to text converter, the resulting text can resemble AI-generated prose even though the source is entirely human.

Detection helps identify where that transformation becomes too aggressive.

Editing needs restraint

Light revision preserves voice. Heavy normalization removes it. Detection tools reveal when that line has been crossed.

Academic and Professional Pressure

Rules are unclear, consequences are not

Institutions often lack precise AI policies, but enforcement still happens. This uncertainty drives writers to self-check excessively.

An AI checker becomes a way to separate content quality from compliance anxiety.

Strong argumentation lowers risk

Analytical sections tend to score as more human. Detection does not punish thinking. It flags fluent emptiness.

This reinforces good writing habits rather than undermining them.

What Detection Tools Cannot Decide

They do not judge intent

A high AI score does not prove misuse. A low score does not prove originality. Treating results as verdicts leads to bad decisions.

Detection should inform revision, not replace judgment.

They cannot replace ownership

Writers must still stand behind their work. Tools reveal patterns, not purpose.

Dechecker works best when used as a second read, not a final authority.

Writing Forward

Human writing is contextual

It reflects perspective, constraint, and choice. These qualities emerge from thinking, not from stylistic tricks.

Detection tools tend to read that depth as human because it breaks up uniformity naturally.

The goal is awareness, not disguise

An AI checker is not about hiding. It is about understanding how writing appears when stripped of context.

Used carefully, Dechecker helps writers keep fluency without losing intention.